INEX 2011 Books and Social Search Track

The evaluation results below are based on the official INEX 2011 SB topic set, which consists of 211 topics from the LibraryThing discussion groups. There are two separate sets of relevance judgements. The first set is derived from the suggestions made by members of the discussion groups (identified by LT Touchstones). The second set is based on judgements from Amazon Mechanical Turk for 24 of the 211 topics.

These are the two official Qrels sets:

Qrels: inexSB2011.LT.qrels.official

| Run | nDCG@10 | P@10 | MRR | MAP |
|---|---|---|---|---|
| p4-inex2011SB.xml_social.fb.10.50 | 0.3101 | 0.2071 | 0.4811 | 0.2283 |
| p54-run4.all-topic-fields.reviews-split.combSUM | 0.2991 | 0.1991 | 0.4731 | 0.1945 |
| p4-inex2011SB.xml_social | 0.2913 | 0.1910 | 0.4661 | 0.2115 |
| p4-inex2011SB.xml_full.fb.10.50 | 0.2853 | 0.1858 | 0.4453 | 0.2051 |
| p54-run2.all-topic-fields.all-doc-fields | 0.2843 | 0.1910 | 0.4567 | 0.2035 |
| p62.recommandation | 0.2710 | 0.1900 | 0.4250 | 0.1770 |
| p54-run3.title.reviews-split.combSUM | 0.2643 | 0.1858 | 0.4195 | 0.1661 |
| p62.sdm-reviews-combine | 0.2618 | 0.1749 | 0.4361 | 0.1755 |
| p62.baseline-sdm | 0.2536 | 0.1697 | 0.3962 | 0.1815 |
| p62.baseline-tags-browsenode | 0.2534 | 0.1687 | 0.3877 | 0.1884 |
| p4-inex2011SB.xml_full | 0.2523 | 0.1649 | 0.4062 | 0.1825 |
| p4-inex2011SB.xml_amazon | 0.2411 | 0.1536 | 0.3939 | 0.1722 |
| p62.sdm-wiki | 0.1953 | 0.1332 | 0.3017 | 0.1404 |
| p62.sdm-wiki-anchors | 0.1724 | 0.1199 | 0.2720 | 0.1253 |
| p4-inex2011SB.xml_lt | 0.1592 | 0.1052 | 0.2695 | 0.1199 |
| p18.UPF_QE_group_BTT02 | 0.1531 | 0.0995 | 0.2478 | 0.1223 |
| p18.UPF_QE_genregroup_BTT02 | 0.1327 | 0.0934 | 0.2283 | 0.1001 |
| p18.UPF_QEGr_BTT02_RM | 0.1291 | 0.0872 | 0.2183 | 0.0973 |
| p18.UPF_base_BTT02 | 0.1281 | 0.0863 | 0.2135 | 0.1018 |
| p18.UPF_QE_genre_BTT02 | 0.1214 | 0.0844 | 0.2089 | 0.0910 |
| p18.UPF_base_BT02 | 0.1202 | 0.0796 | 0.2039 | 0.1048 |
| p54-run1.title.all-doc-fields | 0.1129 | 0.0801 | 0.1982 | 0.0868 |
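For readers unfamiliar with the four score columns, the following is a minimal sketch of how P@10, MRR, MAP and nDCG@10 are commonly computed. It is an illustration only: this page does not state which tool or which nDCG variant produced the official scores, so the linear gain with a log2 discount, the function names, and the data layout (`run` as topic to ranked book ids, `qrels` as topic to book id to gain) are all assumptions.

```python
import math


def precision_at_k(ranking, rels, k=10):
    """Fraction of the top-k positions filled by relevant items (gain > 0)."""
    return sum(1 for doc in ranking[:k] if rels.get(doc, 0) > 0) / k


def reciprocal_rank(ranking, rels):
    """1 / rank of the first relevant item, or 0 if none is retrieved."""
    for rank, doc in enumerate(ranking, start=1):
        if rels.get(doc, 0) > 0:
            return 1.0 / rank
    return 0.0


def average_precision(ranking, rels):
    """Mean of precision values at each relevant item, over all relevant items."""
    n_rel = sum(1 for gain in rels.values() if gain > 0)
    if n_rel == 0:
        return 0.0
    hits, total = 0, 0.0
    for rank, doc in enumerate(ranking, start=1):
        if rels.get(doc, 0) > 0:
            hits += 1
            total += hits / rank
    return total / n_rel


def ndcg_at_k(ranking, rels, k=10):
    """DCG of the top k divided by the DCG of an ideal ordering.

    Assumed variant: linear gain, log2(rank + 1) discount.
    """
    def dcg(gains):
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

    ideal = dcg(sorted(rels.values(), reverse=True)[:k])
    if ideal == 0:
        return 0.0
    return dcg([rels.get(doc, 0) for doc in ranking[:k]]) / ideal


def evaluate(run, qrels, k=10):
    """Average each metric over all judged topics.

    A topic with no results in the run contributes a score of 0.
    """
    totals = {"nDCG": 0.0, "P": 0.0, "MRR": 0.0, "MAP": 0.0}
    for topic, rels in qrels.items():
        ranking = run.get(topic, [])
        totals["nDCG"] += ndcg_at_k(ranking, rels, k)
        totals["P"] += precision_at_k(ranking, rels, k)
        totals["MRR"] += reciprocal_rank(ranking, rels)
        totals["MAP"] += average_precision(ranking, rels)
    return {name: total / len(qrels) for name, total in totals.items()}


# Hypothetical toy data: two judged topics, one of which the run answers.
toy_run = {"t1": ["b1", "b2", "b3", "b4"]}
toy_qrels = {"t1": {"b2": 1, "b4": 1, "b9": 1}, "t2": {"b5": 1}}
toy_scores = evaluate(toy_run, toy_qrels)
```

Averaging over every judged topic, including those for which a run returns nothing, is a design choice of this sketch; it keeps runs that skip topics from being scored on a smaller, easier set.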

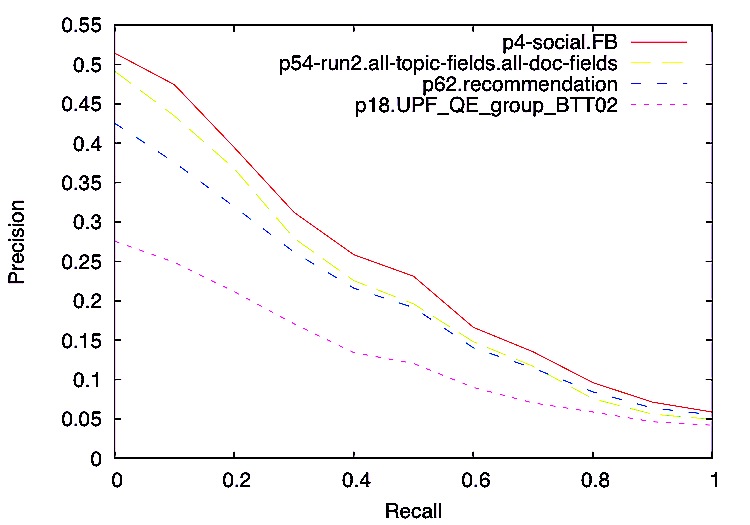

Precision-recall curves, best system per group:

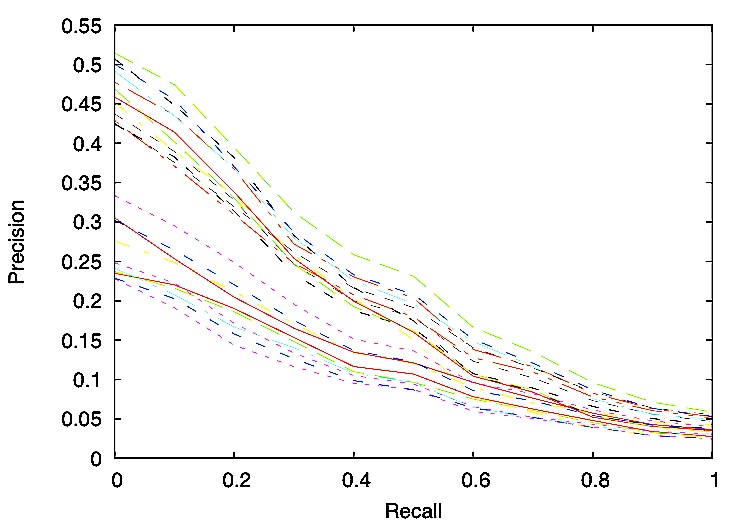

Precision-recall curves, all systems:

Qrels: inexSB2011.AMT.qrels.official

The results below are based on 24 of the 211 topics, with relevance judgements from Amazon Mechanical Turk, based on pools of the top 10 results of all official runs.

| Run | nDCG@10 | P@10 | MRR | MAP |
|---|---|---|---|---|
| p62.baseline-sdm | 0.6092 | 0.5875 | 0.7794 | 0.3896 |
| p4-inex2011SB.xml_amazon | 0.6055 | 0.5792 | 0.7940 | 0.3500 |
| p62.baseline-tags-browsenode | 0.6012 | 0.5708 | 0.7779 | 0.3996 |
| p4-inex2011SB.xml_full | 0.6011 | 0.5708 | 0.7798 | 0.3818 |
| p4-inex2011SB.xml_full.fb.10.50 | 0.5929 | 0.5500 | 0.8075 | 0.3898 |
| p62.sdm-reviews-combine | 0.5654 | 0.5208 | 0.7584 | 0.2781 |
| p4-inex2011SB.xml_social | 0.5464 | 0.5167 | 0.7031 | 0.3486 |
| p4-inex2011SB.xml_social.fb.10.50 | 0.5425 | 0.5042 | 0.7210 | 0.3261 |
| p54-run2.all-topic-fields.all-doc-fields | 0.5415 | 0.4625 | 0.8535 | 0.3223 |
| p54-run3.title.reviews-split.combSUM | 0.5207 | 0.4708 | 0.7779 | 0.2515 |
| p62.recommandation | 0.5027 | 0.4583 | 0.7598 | 0.2804 |
| p54-run4.all-topic-fields.reviews-split.combSUM | 0.5009 | 0.4292 | 0.8049 | 0.2331 |
| p62.sdm-wiki | 0.4862 | 0.4458 | 0.6886 | 0.3056 |
| p18.UPF_base_BTT02 | 0.4718 | 0.4750 | 0.6276 | 0.3269 |
| p18.UPF_QE_group_BTT02 | 0.4546 | 0.4417 | 0.6128 | 0.3061 |
| p18.UPF_QEGr_BTT02_RM | 0.4516 | 0.4375 | 0.6128 | 0.2967 |
| p54-run1.title.all-doc-fields | 0.4508 | 0.4333 | 0.6600 | 0.2517 |
| p18.UPF_base_BT02 | 0.4393 | 0.4292 | 0.6236 | 0.3108 |
| p62.sdm-wiki-anchors | 0.4387 | 0.3917 | 0.6445 | 0.2778 |
| p18.UPF_QE_genre_BTT02 | 0.4353 | 0.4375 | 0.5542 | 0.2763 |
| p4-inex2011SB.xml_lt | 0.3949 | 0.3583 | 0.6495 | 0.2199 |
| p18.UPF_QE_genregroup_BTT02 | 0.3838 | 0.3625 | 0.5966 | 0.2478 |
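The Mechanical Turk judgements above were collected on pools of the top 10 results of all official runs. As a rough illustration of what such pooling involves, the sketch below takes the union of the top-ranked items per topic across runs. The function name, the run names, and the in-memory layout (run name to topic to ranked book ids) are hypothetical; the actual pooling procedure is not described on this page beyond the depth of 10.

```python
def build_pool(runs, depth=10):
    """Return {topic: set of ids} holding every id ranked in the top `depth` by any run."""
    pool = {}
    for rankings in runs.values():
        for topic, ranking in rankings.items():
            # Union the top-`depth` ids of this run into the topic's pool.
            pool.setdefault(topic, set()).update(ranking[:depth])
    return pool


# Hypothetical toy data with a pool depth of 2 to keep the example small.
toy_runs = {
    "runA": {"t1": ["b1", "b2", "b3"]},
    "runB": {"t1": ["b2", "b4"], "t2": ["b7"]},
}
toy_pool = build_pool(toy_runs, depth=2)
```

Because the pool is a set union, an item returned by several runs is judged only once, and items ranked below the pool depth by every run remain unjudged.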

Qrels: inexSB2011.LT.AMT_selected.qrels.official

The results below are based on 24 of the 211 topics, with relevance judgements from Amazon Mechanical Turk, based on pools of the top 10 results of all official runs.

| Run | nDCG@10 | P@10 | MRR | MAP |
|---|---|---|---|---|
| p4-inex2011SB.xml_social.fb.10.50 | 0.3039 | 0.2120 | 0.5339 | 0.1994 |
| p54-run2.all-topic-fields.all-doc-fields | 0.2977 | 0.1940 | 0.5225 | 0.2113 |
| p4-inex2011SB.xml_social | 0.2868 | 0.1980 | 0.5062 | 0.1873 |
| p54-run4.all-topic-fields.reviews-split.combSUM | 0.2601 | 0.1940 | 0.4758 | 0.1515 |
| p4-inex2011SB.xml_full.fb.10.50 | 0.2323 | 0.1580 | 0.4450 | 0.1579 |
| p62.recommandation | 0.2309 | 0.1720 | 0.4126 | 0.1415 |
| p54-run3.title.reviews-split.combSUM | 0.2134 | 0.1720 | 0.3654 | 0.1261 |
| p62.sdm-reviews-combine | 0.2080 | 0.1500 | 0.4048 | 0.1352 |
| p4-inex2011SB.xml_full | 0.2037 | 0.1460 | 0.3708 | 0.1349 |
| p62.baseline-sdm | 0.2012 | 0.1440 | 0.3671 | 0.1328 |
| p62.baseline-tags-browsenode | 0.1957 | 0.1300 | 0.3575 | 0.1395 |
| p4-inex2011SB.xml_amazon | 0.1865 | 0.1340 | 0.3651 | 0.1261 |
| p62.sdm-wiki | 0.1575 | 0.1120 | 0.2849 | 0.1090 |
| p4-inex2011SB.xml_lt | 0.1550 | 0.0960 | 0.3057 | 0.0994 |
| p62.sdm-wiki-anchors | 0.1331 | 0.0940 | 0.2497 | 0.0930 |
| p18.UPF_QE_group_BTT02 | 0.1073 | 0.0720 | 0.2133 | 0.0850 |
| p18.UPF_QE_genregroup_BTT02 | 0.0984 | 0.0660 | 0.1956 | 0.0743 |
| p18.UPF_base_BT02 | 0.0942 | 0.0540 | 0.1988 | 0.0823 |
| p18.UPF_QEGr_BTT02_RM | 0.0912 | 0.0620 | 0.1915 | 0.0660 |
| p54-run1.title.all-doc-fields | 0.0907 | 0.0680 | 0.1941 | 0.0607 |
| p18.UPF_base_BTT02 | 0.0808 | 0.0500 | 0.1570 | 0.0688 |
| p18.UPF_QE_genre_BTT02 | 0.0775 | 0.0580 | 0.1440 | 0.0611 |

If you have questions, please send an email to marijn.koolen@uva.nl.